Did Facebook finally figure out that consent is more important than nipples?

Published on: 28 April 2017

When you receive calls at all hours from women desperate to get intimate photos shared without consent taken offline, it's a relief to hear about Facebook's latest move to address the distribution of non-consensual intimate images. Finally! Technical solutions to social problems seldom make a good fit, even in a digitally layered world, so here is our take on some pluses and limitations of this move, as well as a lot of questions. Will this initiative that attempts to address harm be misused to curb sexual expression, education, or pleasure?

The innovation

Earlier this month, Facebook announced a new tool that will prevent an intimate image posted without consent from being shared further on Facebook, Messenger and Instagram. When a user reports an image, Facebook's community operations team reviews it. If it is found to be in violation of community standards, it will be removed, and the account that shared the image will likely be disabled. Facebook will then use its photo-matching technology to ensure that the picture is not posted elsewhere on the participating platforms, and that it cannot be posted again by other accounts. If a photo has been wrongly reported, there is a possibility of appeal.

This same technology, which cross-checks image hash values against a database of flagged photos, is used to limit the distribution of content related to child sexual exploitation and, more recently, images portraying violent extremism. It's also used in reverse-image search tools. The hash value is a short string of characters that is, for practical purposes, unique to a photo, which facilitates speedy searching and identification of that specific photo. These digital fingerprints can be quickly computed, but it's difficult to reconstruct a photo from its hash value, which means that even if someone gets hold of the hash of a sexually explicit photo, they can't regenerate the actual image.
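To make the idea of a digital fingerprint concrete, here is a minimal sketch in Python. It is an illustration only: Facebook has not published the details of its photo-matching technology, so we use SHA-256 from Python's standard library as a stand-in, and the names `fingerprint`, `flag_image` and `is_flagged` are our own. A cryptographic hash like this one only matches byte-for-byte identical files, whereas real photo-matching systems are generally designed to tolerate small changes to an image.

```python
import hashlib

# Assumption: SHA-256 stands in for the platform's undisclosed matching
# algorithm. It only matches files that are byte-for-byte identical.
def fingerprint(image_bytes):
    """Return a short, fixed-length hex string derived from the image."""
    return hashlib.sha256(image_bytes).hexdigest()

# The "database" holds fingerprints only, never the photos themselves.
flagged_hashes = set()

def flag_image(image_bytes):
    """Record the fingerprint of an image confirmed as non-consensual."""
    flagged_hashes.add(fingerprint(image_bytes))

def is_flagged(image_bytes):
    """Check whether an image's fingerprint is already in the database."""
    return fingerprint(image_bytes) in flagged_hashes

# Placeholder bytes stand in for real image files.
reported_photo = b"bytes of the reported photo"
other_photo = b"bytes of an unrelated photo"

flag_image(reported_photo)
print(is_flagged(reported_photo))  # the same file is recognised
print(is_flagged(other_photo))     # a different file is not
```

The set contains 64-character strings rather than images, and there is no practical way to turn one of those strings back into the photo it came from.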

Thumbs up

You don’t have to report every instance of a photo

A photo will no longer need to be reported each and every time it appears on Facebook, nor do you have to know which other accounts have uploaded it. It doesn't matter if the photo has been uploaded a thousand times on Facebook: one report can not only take down the picture, but the image's hash value will also be used to identify and eliminate duplicates of the photo on Facebook, Messenger and Instagram. A report will still have to be filed for each different photo, but only once. This spells enormous relief for people – most frequently women or LGBTQI persons – trying to control the spread of their intimate photos.

It can stop future uploads of the same photo

As we all know, the creation of multiple accounts to share these photos is a common strategy, especially clone accounts of the person whose photos are being shared without consent. The photo-matching technology will also alert Facebook to a non-consensual image upload in progress and stop it in its tracks.
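The flow described so far (one confirmed report, removal of existing duplicates, and a block on future uploads) can be sketched as a toy model. Everything here is hypothetical: the `Platform` class, its method names and the use of SHA-256 are our assumptions for illustration, not a description of how Facebook's systems are built.

```python
import hashlib

def fingerprint(image_bytes):
    # Assumption: SHA-256 stands in for the real, undisclosed matching method.
    return hashlib.sha256(image_bytes).hexdigest()

class Platform:
    """Toy model of a photo-sharing site (hypothetical, for illustration)."""

    def __init__(self):
        self.posts = {}        # post_id -> (account, image_bytes)
        self.blocked = set()   # fingerprints of confirmed non-consensual images
        self.next_id = 1

    def upload(self, account, image_bytes):
        """Publish a photo, or return None if its fingerprint is blocked."""
        if fingerprint(image_bytes) in self.blocked:
            return None
        post_id = self.next_id
        self.next_id += 1
        self.posts[post_id] = (account, image_bytes)
        return post_id

    def confirm_report(self, post_id):
        """After human review, block the image and remove every copy of it."""
        _, image_bytes = self.posts[post_id]
        digest = fingerprint(image_bytes)
        self.blocked.add(digest)
        duplicates = [pid for pid, (_, data) in self.posts.items()
                      if fingerprint(data) == digest]
        for pid in duplicates:
            del self.posts[pid]
        return duplicates

site = Platform()
first = site.upload("account_a", b"photo-1")
site.upload("account_b", b"photo-1")   # same photo, different account
site.upload("account_c", b"photo-2")   # unrelated photo

removed = site.confirm_report(first)   # one report, reviewed once
retry = site.upload("account_d", b"photo-1")
print(removed, retry)
```

In this sketch a single confirmed report removes both existing copies, and a later attempt to upload the same file from a new account is refused, while the unrelated photo stays up. The model only acts on posts that still exist when the report is confirmed.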

This raises a question for us, however. A frequent strategy in “sextortion” and other threats (demands for more intimate photos, videos or sexual acts to avoid public distribution of sexually explicit material) is to publish a post and then erase it and/or the account after the target of the threat has seen it. It's a way for the perpetrator to gain greater control over the subject. So, by the time the report is reviewed, the post is gone. Will such photos still make it into the hash system?

Reporting finally won’t seem useless

Women we’ve worked with are desperate because reporting seems futile, especially when they are repeatedly told that a picture “does not violate community standards”. In extreme cases when reporting has not worked, women have succumbed to sextortion in order to get images taken down by the poster. The slut-shaming, revictimisation and sexual harassment that women can experience when reporting such photos (to police and to internet platforms – for example, those that offer intimate photo and video sharing and charge women for photo take-down) are a further deterrent to reporting. Knowing that anyone can report, and that there will be an immediate system scan for the photo once it is identified as non-consensual, reduces both the risk and the feeling that reporting won't change anything.

No database of photos

The hash value is what is used for photo-matching, not the actual photo, so this means that intimate images are not stockpiling in a database ripe for hacking or sharing among those with privileged access. This provides further confidence for reporting. Facebook stated that photos are stored in their original format “for a limited time” only. It would be good to know how limited that really is.

Repercussions for posters

In the announcement, Facebook assures us that accounts posting photos identified as non-consensual will likely be disabled. In another news story, the social network refers to permanent deactivation: “Once your account is deactivated for this type of sharing, it's deactivated,” says Antigone Davis, Facebook's global head of safety. This will be heartening for many people, as battling against fake accounts created for the sole purpose of this type of sexual harassment is especially time-consuming and upsetting.

Facebook is taking a stance – sort of

It’s positive to see that Facebook’s emphasis has been on “protecting intimate images” and not “revenge porn” (although media coverage of Facebook’s innovation unfortunately prefers this mistaken concept). If we keep branding the problem as "revenge porn", responses invariably entail calls for abstention, censorship of sexual expression, and victim-blaming, rather than recognising that people's rights to privacy and bodily autonomy have been violated. The decision to deactivate accounts that have uploaded non-consensual images can send a strong message to people about Facebook’s stance on consent and intimate images. People who attempt to upload a flagged photo will get an advisory message that it is in violation of Facebook community standards too. Facebook could go a long way towards educating its user community if it is serious about helping users understand this issue.

Needs more work to get a “like”

Lack of awareness raising

News blasts and platform alerts regarding Facebook’s fake news tool, released just one week later, far surpassed publicity around its new approach to protecting sexually explicit images. Women and others being affected still don’t know this new solution exists, and the Facebook community doesn’t know the platform has a clear position on more than women's nipples.

Warning messages about community standard violations are necessary, but Facebook could also take advantage of a thwarted upload to raise awareness regarding consent criteria. Could Facebook have a targeted public message campaign on sexual rights, expression and consent in partnership with civil society organisations? Or how about a simple ad announcing how to report a non-consensual intimate image?

In some countries, Facebook points people to organisations that can provide support when sexually explicit photos are leaked. Facebook should broaden its resource network to reflect the global geographic and multilingual diversity of its community. It should ensure that recommended organisations defend women’s rights from a human rights framework rather than a moralistic or protectionist point of view which further polices and shames sexuality. Facebook could deepen the collaborative process with the safety round tables initiated in 2016 to help rights organisations raise awareness on its platform about bodily autonomy and why non-consensual distribution of intimate images is a violation against people’s freedoms.

Reporting system is not intuitive

Facebook has had an option to report non-consensual sharing of intimate images for a while – what changes with this innovation is what happens after a report. Unfortunately, it's still not as straightforward as it could be, something we’ve pointed out to Facebook consistently over the years. If you are naked in a photo, and those likes are climbing exponentially, you don’t have time to figure out which rabbit hole of drop-down options you should go down. Most women we accompany immediately choose the logical "I'm in this photo and I don't like it", which only takes you to seemingly petty (by comparison) options about the way your hair looks and letting your buddy know you want the pic taken down. There is no "It's a private sexual image of me being shared without my consent" option there. Why not? Why not include the option to report a non-consensual photo in any logical place a user might go?

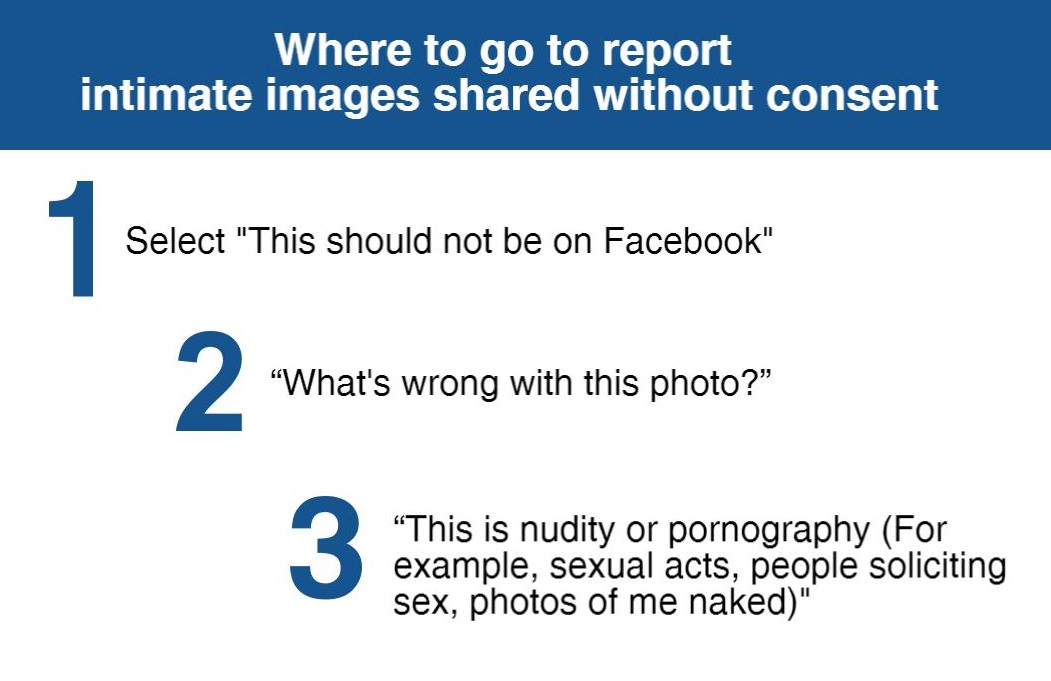

This is something you will like: Where to go to report intimate images shared without consent

So where do you have to go to report? Select “This should not be on Facebook” > “What's wrong with this photo?” > “This is nudity or pornography (for example, sexual acts, people soliciting sex, photos of me naked)”. This option is more intuitively selected by a bystander – a Facebook citizen concerned about community standards – than by someone directly affected. You can also use this form to report, even if you are not a Facebook user: https://www.facebook.com/help/contact/567360146613371

Lack of clarity around account banning and creation

It's not clear how long Facebook plans to keep an account deactivated. Will people be banned for life if they have posted intimate content without consent? "Banned for life" may sound extreme, but users can usually create a new account easily. They won't be able to post the same photo, but they can get back on Facebook. The new measure does not address this problem.

It's also not clear if every single account that shared the photo will also be deactivated. What will happen to those accounts that attempt to upload a previously flagged photo? Will Facebook examine other information such as IP or browser fingerprint to determine repeat offenders, similar to Twitter's attempts to curb abusive accounts by comparing phone numbers, or who is being targeted for attack by new accounts? Will repeat offenders face immediate deactivation of new accounts?

Context matters

Leaving such nuanced decision making up to algorithms or artificial intelligence would guarantee disaster. Rather, Facebook says a team of specially trained community operators will vet the images. Details of what this special training entails remain murky. At a minimum, multilingual, multicultural staff trained in understanding gender, sexual rights and expression, victim-blaming and unintentional censorship are needed to properly understand the context in which such photos are being shared and the harm that can result if they are not taken down.

Even with special training, context is everything. What if the photo is non-consensual but not exactly in violation of Facebook’s nudity terms? Is the fact that it is non-consensual sufficient to tag it as a violation? What if it puts the subject at risk, because the image content goes against cultural or societal norms or reveals someone's identity?

Many people post photo teasers that don’t quite violate the Facebook nudity policy, linking off-site to other online spaces after hooking in their Facebook community. What if the user is located in a country where state and community policing of sexuality can put their bodily integrity at risk if such photos are viewed? How will Facebook’s community operators respond in these context-specific situations? Is their ultimate goal with this policy to “prevent harm”, ensure consent, or enforce nudity standards?

Community operators are under pressure to make decisions in the blink of an eye given the number of reports they must address. A problem this widespread calls for more specially trained support team members, not faster decision making.

Accountability

Any decision to take down content and close accounts in a service as broadly used as Facebook demands special considerations around accountability, transparency and appeal. We hope Facebook will share and continue to consult with rights-based organisations about how its criteria for take-down can evolve, the type of training for community operators, and how it will evaluate the effectiveness of this measure. Transparency regarding the number of take-downs, accounts affected, and analysis of the scope of the problem would be invaluable to develop better solutions on Facebook and beyond.

A lot of specific questions arise depending on one's advocacy work. For example, those supporting women facing non-consensual distribution of intimate images want to know more about cooperation with legal proceedings: will relevant information such as the extent of photo duplication and accounts responsible for or attempting distribution still be available if requested by a court order, especially if accounts are being closed and photos eliminated from the system?

Freedom of expression advocates will have a lot of questions about proportional response and about monitoring whether Facebook has overstepped. For example, how can we ensure that an account ban is proportional to the offence? Any woman who's been deluged with harassing comments or lost her job (to name just a few consequences) due to non-consensual sharing of an intimate photo will not question if banning is proportional to this violation of Facebook community standards, but such questions are important to ask from a rights-based point of view. As banning is Facebook's last and most extreme option, how does harm or intent play into account suspension? For example, what if you are a kid randomly sharing sexy photos, possibly without knowing the subjects, versus someone targeting a woman’s employer, colleagues, family, friends, or linking the photo to identifying and locational information? What if a user is tried under some sort of civil law or penal code, pays damages or even serves time – should they never be allowed to have a Facebook account again?

Women's agency

We cannot dismiss that biased enforcement of community standards has translated into violating women's freedom of expression and rights to bodily autonomy in the past. Facebook considers women's bodies as inherently sexual. Although last year Facebook reiterated that breastfeeding or pictures of mastectomies do not fall under its nudity ban, it still regularly censors news in the public interest, pictures of protests, sex and health educational campaigns, and artistic expression.

It is essential to ensure that women's consensual sexual expression is not being censored as a result of these new measures, especially when photos are reported by someone other than the subject (a positive feature but one that can be abused).

The right to appeal

The appeals process is crucial, because if there is one thing women’s rights defenders know, it is that policies made to defend people whose rights are marginalised are frequently taken advantage of by those with power to further marginalise those at risk. We must all be alert to how this system might get played to attack consensual sexual expression and any expression in favour of women’s and LGBTQI rights.

Collaboration

For years Take Back the Tech! and many other women's rights activists have been asking internet intermediaries to take some responsibility regarding the online gender-based violence that their platforms help facilitate, including the distribution of sexually explicit images without consent. We even had to run a campaign about it in 2014: What are you doing to end violence against women? A key demand was consulting with women's rights activists and women Facebook users, especially those based in the "global South", to gain a deeper understanding of women's realities on Facebook and how the harm they experience has been magnified by the platform's personalised, networked nature and historical lack of privacy by default.

For this innovation, Facebook collaborated with the Cyber Civil Rights Initiative, which has advised survivors and legislators in the US on this issue since 2013. In 2016 it also finally held safety round table discussions with some participation from women’s rights organisations based in Africa, Asia and, to a lesser extent, Latin America. While collaboration does take time, it clearly produces valuable, nuanced solutions. If this had been a true priority for Facebook, such a solution could have been viable sooner, especially within Facebook’s own platform.

Non-consensual distribution of intimate images is clearly a problem that is much larger than Facebook. If this measure stays limited to Facebook, people will simply increase this activity on other sites. An important next step will entail cross-platform collaboration throughout the tech industry. Collaboration with rights-based organisations is also necessary, to avoid unintentional censorship and to keep the system from being abused to limit LGBTQI and women’s rights, including their right to sexual expression. Non-consensual distribution of intimate images is not a legal violation in most countries and, where it is, may be a civil rather than criminal offence. It is frequently poorly defined; some countries have even tried to outlaw sexting, the consensual sharing of intimate images. Experiences in assessing violent extremist images and establishing a shared database of hash values, although also controversial, can help inform how this initiative can grow.

If Facebook is able to cultivate a gender-aware, rights-informed support staff who can vet questions of consent and context – not just nudity – when making calls on intimate images, the resulting database will provide extensive credible content of invaluable potential if later pooled in a shared, independent cross-sector database. Such an initiative has to be focused on addressing harm, not curbing sexual expression, education, or pleasure.
